Juniper’s QFabric: The Dark Horse In The Datacenter Fabric Race?

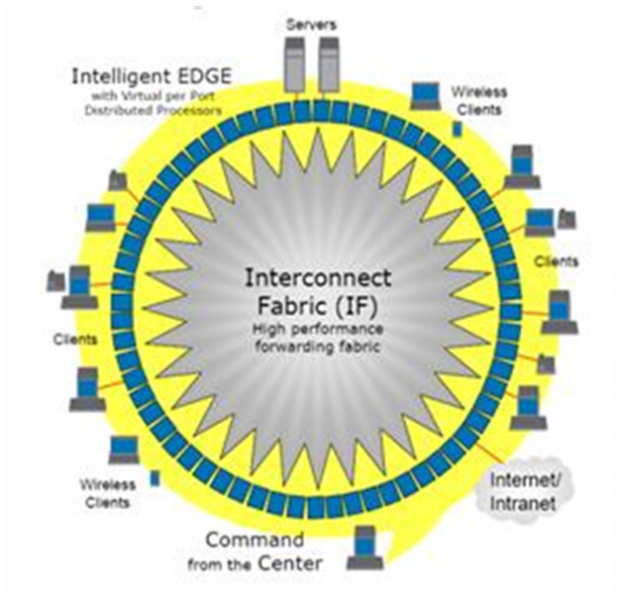

It’s been a few years since I was a disciple of, and evangelist for, HP ProCurve’s Adaptive EDGE Architecture (AEA). Plain and simple, before the 3Com acquisition, it was HP ProCurve’s networking vision: the architecture philosophy created by John McHugh (once HP ProCurve’s VP/GM, currently the CMO of Brocade), Brice Clark (HP ProCurve Director of Strategy), and Paul Congdon (CTO of HP Networking) during a late-night brainstorming session. The trio envisioned network intelligence moving from the traditional enterprise core to the edge, where it would be controlled by centralized policies. Policies based on company strategy and values would come from a policy manager, and the edge devices would be connected by a high-speed, resilient interconnect, much like a carrier backbone (see Figure 1). As soon as users connected to the network, the edge would control them and deliver a customized set of advanced applications and services based on user identity, device, operating system, business needs, location, time, and business policies. This architecture would allow Infrastructure and Operations professionals to create an automated and dynamic platform that delivers the agility businesses need to remain relevant and competitive.

As the HP white paper introducing the EDGE said, “Ultimately, the ProCurve EDGE Architecture will enable highly available meshed networks, a grid of functionally uniform switching devices, to scale out to virtually unlimited dimensions and performance thanks to the distributed decision making of control to the edge.” Sadly, after John McHugh’s departure, HP buried the strategy in favor of its converged infrastructure slogan: Change.

Figure 1 Adaptive EDGE Architecture

Source: HP, “The ProCurve Networking Adaptive EDGE Architecture”

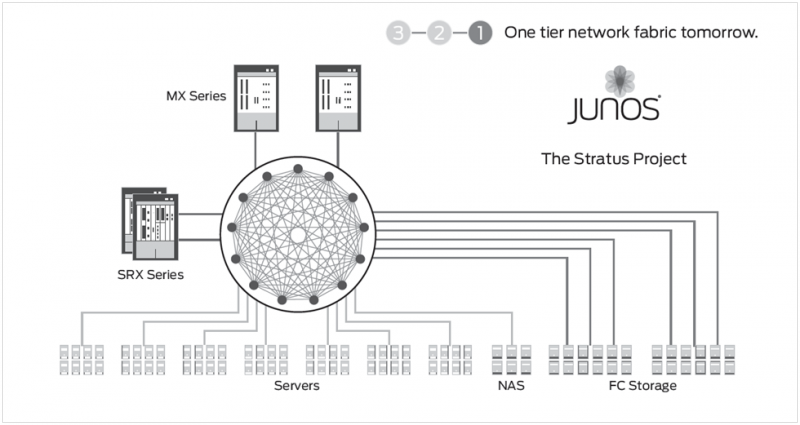

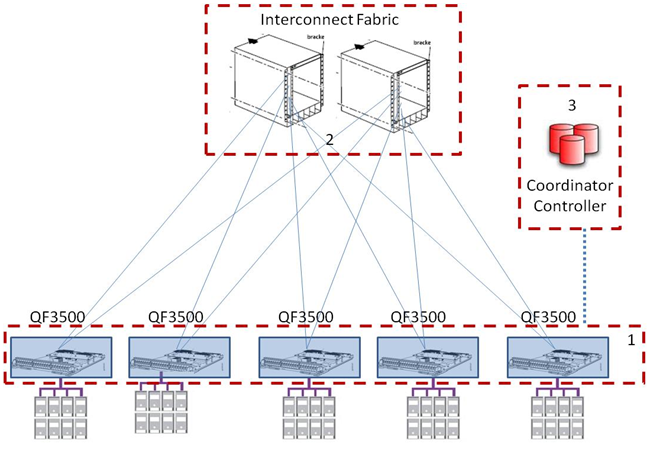

Fast forward 13 years to today, February 23: Juniper Networks releases a set of data center switches that enables its data center version of AEA, the Stratus Project (see Figure 2). The new top-of-rack (ToR) QFX3500 series switch, QFabric interconnect, and controller collapse the traditional three-tiered network into a flatter, 1.5-tiered architecture. (This assumes that you don’t count virtual switches, like VMware vSwitch, which I would consider to be a tier, but that’s another blog.) Picture Juniper’s Stratus Project as distributing the control and data planes to the edge, where servers connect with redundancy and optimization built into the system, while centralizing management functions. In effect, it’s one big switch. Conceptually, take the line cards from an EX8200, place them at the top of a rack (creating a pseudo fixed switch connecting to servers), tether the line cards back to the switch fabric (Part 2) for pure, fast, and resilient switching, and have all the line cards coordinate traffic information through a controller (Part 3). Juniper says this solution can interface with 6,000 servers. Parts 2 and 3 won’t be ready until later this year (see Figure 3).

Figure 2 “One tier network fabric tomorrow” — The Stratus Project

Source: Juniper Networks, “Network Fabrics for the Modern Data Center”

Figure 3 Juniper’s Big Switch Data Center Network Architecture

Source: Forrester Research

Even though Juniper still needs to deliver on Parts 2 and 3, this product launch moves the company up and puts it back in the Data Center Derby with Arista, Avaya, Brocade, and Cisco. Juniper’s vision overlaps Layer 2 and Layer 3, which keeps packets from crossing the fabric for something that can be done locally, thereby eliminating waste. The design also allows the network to be partitioned by workgroup. QFabric’s strongest differentiator is the single management plane that makes all the components behave as one switch without introducing a single point of failure. Juniper’s QFabric drives simplicity into the data center, a value long overdue. No single vendor yet offers all aspects of the next-generation data center network outlined in “The Data Center Network Evolution: Five Reasons This Isn't Your Dad's Network,” but they are getting close.

My bottom-line take: Juniper’s announcement is a big deal, and Infrastructure & Operations pros should pay attention. Why? It’s an interesting approach to the datacenter fabric, which is the last hurdle in unlocking cloud economics in your virtual datacenter. But make sure you consider competing approaches, Brocade’s in particular, since Brocade is the current leader in this race. Regardless, 2011 is the year to build your fabric business case. Failing to do so risks setting your datacenter strategy back five years.

Juniper announced a strong horse, but this race is only in the third furlong — with plenty of excitement before the finish line. What’s your take? Is Juniper the dark horse that will win the fabric race?